Do Not Tank Your Career with the Agentic AI Hype: Top 10 Security Risks You Must Know

The LLM revolution is here to stay, but don't let the hype blind you to the risks and screw your career!

A no-fluff guide to protecting yourself and your organization from Agentic AI and LLM vulnerabilities

Hey there - Mario here.

If you're like most professionals diving into the LLM gold rush, you're probably excited about the incredible potential of Agentic AI tools like ChatGPT, Claude, and other AI models. But here's the thing: with great power comes great responsibility (and risk).

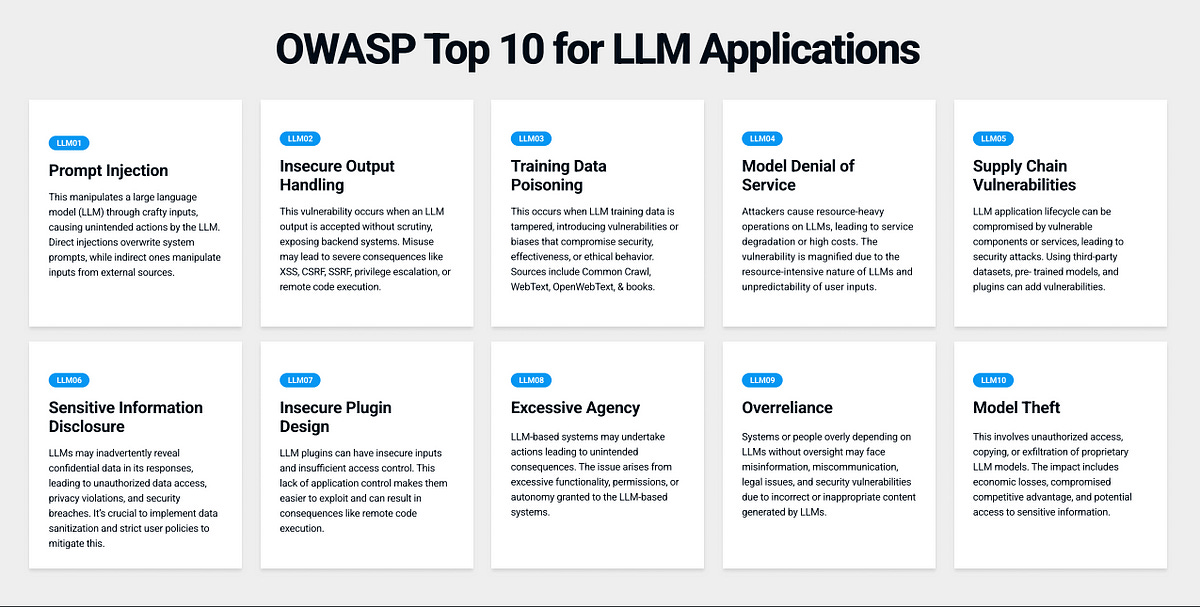

As someone who's been deep in the AI and data privacy space, I want to share the no-nonsense truth about what could actually tank your career when implementing LLMs. Let's break down the OWASP Top 10 LLM Application risks for 2025 and what you need to watch out for.

The Stakes Are Higher Than You Think

Before we dive in, let me be clear: LLM security isn't just another IT checkbox. We're talking about vulnerabilities that could expose sensitive company data, create legal liabilities, and yes - potentially end careers 1.

The Top 10 Career-Ending LLM Risks

1. Prompt Injection

Think SQL injection, but for LLMs. Attackers can manipulate your model's behavior through carefully crafted inputs, potentially exposing sensitive information or bypassing security controls.

2. Sensitive Information Disclosure

Your LLM could be leaking confidential data without you even realizing it. This includes everything from PII to proprietary algorithms.

3. Supply Chain Vulnerabilities

Those third-party models and data sources you're using? They could be compromised, introducing backdoors into your system.

4. Data and Model Poisoning

Bad actors can corrupt your training data or models, leading to biased outputs or security breaches.

5. Improper Output Handling

Without proper validation, your LLM's outputs could contain malicious code or sensitive information.

The Hidden Risks

6. Excessive Agency

Giving your LLM too much autonomy can lead to unauthorized actions and security breaches.

7. System Prompt Leakage

Your model's instructions could be exposed, revealing sensitive information about your system's architecture.

8. Vector/Embedding Weaknesses

Vulnerabilities in how your LLM processes and stores data can be exploited.

9. Misinformation Risks

Your LLM could generate false or misleading information, leading to serious business consequences.

10. Unbounded Consumption

Resource exploitation can lead to massive costs and system failures.

What You Need to Do Now

Implement strict input validation

Establish clear governance frameworks

Train your team on LLM security. Check out the OSWASP LLM AI Cybersecurity & Governance Checklist.

Regular security audits

The Bottom Line

The LLM revolution is here to stay, but don't let the hype blind you to the risks. As I outline in my book "AI Data Privacy and Protection," the key is building security into your AI systems from the ground up 2.

Ready to Dive Deeper?

Subscribe to get reguar insights as I share my knowledge and curated expertise on:

Practical LLM security implementations

Real-world case studies

Latest security threats and mitigation strategies

Expert interviews and analysis

Remember: In the AI gold rush, the ones who succeed aren't just those who move fast - they're the ones who move smart.What's your biggest concern about LLM security? Let me know in the comments below.

Stay safe,

Mario