1. Prompt Chaining - Building Step-by-Step AI Workflows

Prompt Chaining is the Assembly Line for AI. Build complex results from simple, specialized steps.

Prompt Chaining — Agentic Design Pattern Series

Prompt Chaining is a foundational pattern where you link multiple LLM calls together, using the output of one step as the input for the next, to create sophisticated, multi-step workflows.

This pattern is your starting point for moving beyond single-prompt toys to building reliable AI-powered automations. By breaking down a complex task (like "research and write a report") into a sequence of smaller, more manageable sub-tasks ("find sources," "extract key points," "draft the report," "format the output"), you can substantially improve the quality and reliability of the final result. For a business, that can be the difference between an AI feature that works about half the time and one that works almost every time (say, 50% versus 95%; these figures are illustrative, not measurements).

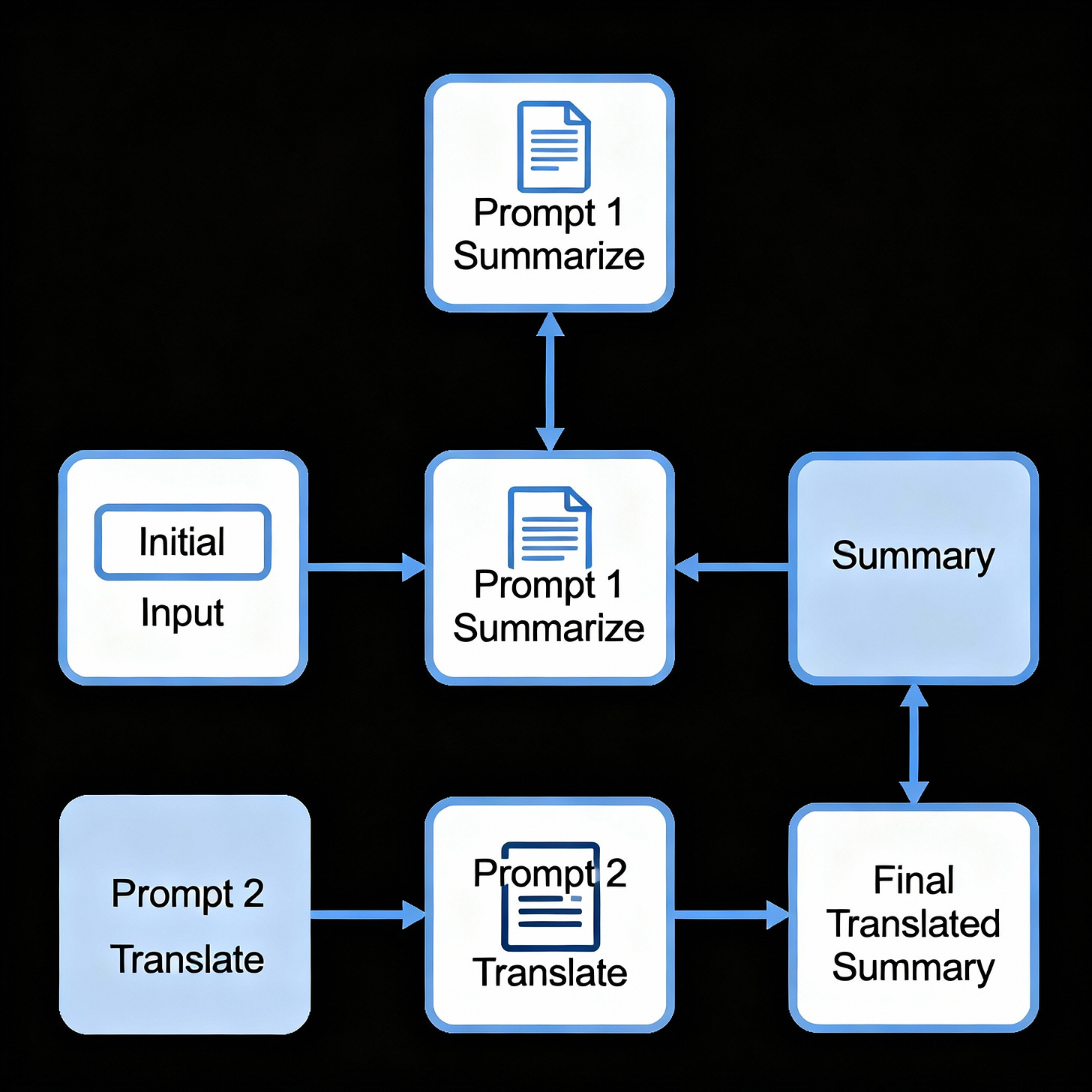

📺 Diagram and Videos

Sequential Chain with LangChain (This video provides a great conceptual overview and code examples for sequential chains).

No Code Implementation of Chains (A clear, concise explanation of the core concept).

🚩 What Is Prompt Chaining?

"The art of advanced prompting isn't about crafting one perfect, monolithic prompt. It's about knowing how to break a problem down and build a 'prompt assembly line' where each station does one thing perfectly."

Prompt Chaining is the technique of creating a sequence of LLM calls where the output of one call becomes the direct input for the next. This creates a logical, step-by-step workflow. The intended outcome is to achieve a complex or high-quality result that would be difficult or unreliable to obtain with a single, massive prompt.

🏗 Use Cases

Scenario: A marketing team needs to repurpose a long, technical whitepaper into a short, engaging Twitter thread. Using a single prompt like "Turn this 10-page paper into a Twitter thread" often yields generic or inaccurate results.

Applying the Pattern:

Step 1 (Summarize): An initial prompt extracts the 3-5 most critical takeaways from the whitepaper.

Step 2 (Re-Angle for Audience): The output (the key takeaways) is fed into a second prompt that rewrites them in a punchy, non-technical tone suitable for a general audience on Twitter.

Step 3 (Format as Thread): The rewritten points are then passed to a final prompt that formats them into a numbered Twitter thread, adding relevant hashtags and a call-to-action.

Outcome: Because each step has one narrow job, the final Twitter thread is far more likely to be accurate, on-tone, and correctly formatted than the output of a single catch-all prompt.
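
The three steps above can be sketched as a small Python chain. This is a minimal sketch, not a definitive implementation: `fake_llm` is a hypothetical stand-in for a real provider call, and the prompt wording is an assumption chosen for illustration.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM API call. It only echoes the
    # instruction line so the data flow through the chain stays visible.
    instruction = prompt.splitlines()[0]
    return f"[response to: {instruction}]"


def whitepaper_to_thread(whitepaper: str, llm: Callable[[str], str] = fake_llm) -> str:
    # Step 1 (Summarize): extract the most critical takeaways.
    takeaways = llm(
        f"List the 3-5 most critical takeaways from this whitepaper.\n{whitepaper}"
    )
    # Step 2 (Re-angle): rewrite the takeaways for a general audience.
    rewritten = llm(
        f"Rewrite these takeaways in a punchy, non-technical tone.\n{takeaways}"
    )
    # Step 3 (Format): turn the rewritten points into a numbered thread.
    return llm(
        "Format these points as a numbered thread with hashtags and a call-to-action."
        f"\n{rewritten}"
    )
```

Passing the LLM in as a callable keeps each step easy to swap or stub out in tests.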

General Use: This pattern is perfect for any multi-step process that must be executed in a specific order.

Summarize-then-Translate: The first prompt summarizes a long article, the second translates that summary into another language.

Extract-then-Format: The first pulls out key data points (names, dates, locations); the second formats them into JSON or a table.

Brainstorm-then-Elaborate: The first creates a list of ideas; the next expands on a selected one.
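
All three variants share one shape: an ordered list of prompt templates, where each template receives the previous step's output. A minimal generic runner might look like the sketch below. The `run_chain` helper, the `{input}` placeholder convention, and the `echo_llm` stub are all assumptions made for illustration, not part of any library.

```python
from typing import Callable, List, Sequence


def run_chain(
    templates: Sequence[str], initial_input: str, llm: Callable[[str], str]
) -> List[str]:
    """Run each template in order and return every intermediate output."""
    outputs = []
    current = initial_input
    for template in templates:
        # The previous step's output fills the {input} slot of the next prompt.
        current = llm(template.format(input=current))
        outputs.append(current)
    return outputs


def echo_llm(prompt: str) -> str:
    # Hypothetical stub so the sketch runs without an LLM provider.
    return prompt.upper()


SUMMARIZE_THEN_TRANSLATE = [
    "Summarize this article: {input}",
    "Translate this summary into French: {input}",
]

steps = run_chain(SUMMARIZE_THEN_TRANSLATE, "some article", echo_llm)
```

Returning every intermediate output, rather than only the last one, makes it easier to inspect where a chain went wrong.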

💻 Sample Code / Pseudocode

This pseudocode in Python demonstrates a simple chain for extracting a topic and then writing an explanation.

```python
def call_llm(prompt):
    # In a real application, this would be an API call to an LLM provider.
    # For this example, we'll simulate the response.
    print(f"--- Calling LLM with prompt: ---\n{prompt[:100]}...\n")
    if "Extract the key topic" in prompt:
        return "Quantum Computing"
    elif "Write a 3-paragraph explanation" in prompt:
        return "Quantum computing is a revolutionary field... [full explanation here] ..."
    return "Error: Unknown prompt."


def run_summarize_and_explain_chain(long_text):
    """
    A simple chain with two steps:
    1. Extract the main topic from a long text.
    2. Write an explanation of that topic.
    """
    # Step 1: First LLM call
    prompt_1 = f"Extract the key topic from this text: {long_text}"
    topic = call_llm(prompt_1)
    print(f"--- Output of Step 1: ---\n{topic}\n")

    # Step 2: Second LLM call, using the output from Step 1 as input
    prompt_2 = f"Write a 3-paragraph explanation of the topic: {topic}"
    explanation = call_llm(prompt_2)
    print(f"--- Output of Step 2 (Final Result): ---\n{explanation}\n")
    return explanation


# --- Execute the chain ---
initial_input = "A long article discussing the principles of superposition and entanglement..."
run_summarize_and_explain_chain(initial_input)
```

🟢 Pros

Simplicity: Easy to implement and understand.

Reliability: Breaking tasks into smaller, focused sub-tasks increases the chances of success.

Specialization: Each prompt can be finely optimized for its immediate purpose, improving overall quality.

🔴 Cons

Latency: Sequential steps may lead to slower total response time as each step must wait for the previous one.

Error Propagation: Early mistakes negatively affect all following outputs.

Rigidity: Fixed flows cannot dynamically adapt based on the input.

Token Usage: Context and outputs accumulate, which can result in high token consumption for long chains.

🛑 Anti-Patterns (Mistakes to Avoid)

Overly Long Chains: Avoid chaining more than 4-5 steps without an intermediate summarization or data reduction step. Long chains tend to lose focus and drive up token costs.

Ignoring Step Validation: Never assume the output of a step is correct. If one step fails to produce a valid output (e.g., malformed JSON), the entire chain breaks.

Monolithic Design: Don't build one massive, rigid chain for everything. Design smaller, reusable chains that can be combined.

Unrelated Task Chaining: Don't chain sub-tasks that are not logically dependent. If tasks can be run independently, use the Parallelization pattern instead.
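
To make the step-validation point concrete, here is a minimal sketch of a guarded step that parses the output as JSON, checks the expected fields, and retries on failure. It uses only the standard library. The `REQUIRED_FIELDS` schema, the helper names, and the retry count are hypothetical choices for illustration.

```python
import json
from typing import Callable

# Hypothetical schema for illustration: field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "date": str}


def parse_step_output(raw: str) -> dict:
    """Parse one step's output as JSON and check the expected fields and types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    return data


def validated_step(llm: Callable[[str], str], prompt: str, max_attempts: int = 2) -> dict:
    """Call the step, retrying when the output fails validation."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_step_output(llm(prompt))
        except ValueError as error:
            last_error = error
    raise RuntimeError(
        f"step failed validation after {max_attempts} attempts"
    ) from last_error
```

Failing loudly at the step that produced bad output keeps a malformed result from silently propagating down the chain.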

🛠 Best Practices

Validate Between Steps: Always parse and validate the output of each step before passing it to the next. For structured data, use a validation library like Pydantic.

Summarize for Long Chains: If a chain has many steps, include a "summarize context" step periodically to keep the core information without bloating the context window.

Modularize Prompts: Store each prompt as a separate template. This makes them easier to test, version, and reuse across different chains.
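
The last two practices can be combined in one small sketch: prompts live in a named template registry, and the chain compresses its accumulated context whenever it grows past a budget. The `PROMPTS` registry, the `MAX_CONTEXT_CHARS` budget, and the character-based size check are assumptions for illustration. A real chain would more likely count tokens.

```python
from typing import Callable

# Each prompt is a separate named template so it can be tested and versioned alone.
PROMPTS = {
    "summarize_context": "Summarize the following notes, keeping only key facts:\n{context}",
    "next_step": "Using these notes:\n{context}\n\nDo the following task: {task}",
}

MAX_CONTEXT_CHARS = 2000  # hypothetical budget; a real chain would count tokens


def run_long_chain(tasks: list, llm: Callable[[str], str]) -> str:
    """Run tasks in order, summarizing the context whenever it exceeds the budget."""
    context = ""
    for task in tasks:
        if len(context) > MAX_CONTEXT_CHARS:
            # Data-reduction step: compress accumulated context before continuing.
            context = llm(PROMPTS["summarize_context"].format(context=context))
        output = llm(PROMPTS["next_step"].format(context=context, task=task))
        context = f"{context}\n{output}".strip()
    return context
```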

🧪 Sample Test Plan

Unit Tests: Test each prompt in the chain individually. Mock the LLM call and provide a known input to the prompt template to ensure it formats correctly.

```python
# Example using pytest for a single prompt template
def test_summarize_prompt():
    prompt_template = "Summarize this text: {text}"
    formatted_prompt = prompt_template.format(text="This is a test.")
    assert formatted_prompt == "Summarize this text: This is a test."
```

End-to-End (Integration) Tests: Test the entire chain with a set of golden "input/output" pairs. Provide a real input and assert that the final output contains the expected keywords, structure, or information.

Robustness Tests: Actively try to break the chain. Feed it edge-case inputs like empty strings, very long documents, text in a different language, or irrelevant content to see how it handles failures.

Performance Tests: Measure the two most important metrics: latency and token cost. Run the chain 50-100 times with representative inputs and log the average time and tokens consumed to identify bottlenecks.
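
For the performance tests, a minimal measurement harness could look like the sketch below. It assumes the chain is exposed as a single callable. The `estimate_tokens` heuristic (about 4 characters per token) is a rough assumption, and it only sees the chain's input and final output, not the intermediate prompts. For real numbers, use your provider's tokenizer or reported usage.

```python
import statistics
import time
from typing import Callable


def estimate_tokens(text: str) -> int:
    # Rough assumption: about 4 characters per token.
    return max(1, len(text) // 4)


def benchmark_chain(chain: Callable[[str], str], inputs: list, runs: int = 50) -> dict:
    """Run the chain repeatedly and report average latency and estimated tokens."""
    latencies, tokens = [], []
    for i in range(runs):
        text = inputs[i % len(inputs)]  # cycle through the representative inputs
        start = time.perf_counter()
        output = chain(text)
        latencies.append(time.perf_counter() - start)
        tokens.append(estimate_tokens(text) + estimate_tokens(output))
    return {
        "runs": runs,
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
        "mean_tokens": statistics.mean(tokens),
    }
```

Logging the maximum alongside the mean helps surface the occasional slow run that an average would hide.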

🤖 LLM as Judge/Evaluator

Recommendation: Use a powerful, separate LLM (like GPT-4 or Gemini 1.5 Pro) as an impartial "judge" to evaluate the quality of your chain's final output.

How to Apply: Create a "scoring prompt" that defines a rubric. Feed it the initial query and the final output of your chain, and ask it to score the result from 1-10 on criteria like Accuracy, Coherence, Format Adherence, and Helpfulness. This is a powerful way to automate A/B testing between two versions of your chain.
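
A scoring prompt and a simple A/B comparison might be sketched as follows. The rubric wording, the JSON reply format, and the `judge` and `compare_variants` helpers are assumptions for illustration. `judge_llm` stands in for whichever judge model you call.

```python
import json
from typing import Callable

CRITERIA = ["accuracy", "coherence", "format_adherence", "helpfulness"]

JUDGE_PROMPT = (
    "You are grading the output of an AI workflow.\n"
    "Score each criterion from 1 to 10: {criteria}.\n"
    "Reply with a JSON object mapping each criterion to an integer.\n\n"
    "Query:\n{query}\n\nOutput to grade:\n{output}"
)


def judge(query: str, output: str, judge_llm: Callable[[str], str]) -> dict:
    """Ask the judge model for rubric scores and validate its reply."""
    prompt = JUDGE_PROMPT.format(
        criteria=", ".join(CRITERIA), query=query, output=output
    )
    scores = json.loads(judge_llm(prompt))
    for name in CRITERIA:
        score = scores.get(name)
        if not isinstance(score, int) or not 1 <= score <= 10:
            raise ValueError(f"invalid score for {name}: {score!r}")
    return {name: scores[name] for name in CRITERIA}


def compare_variants(
    query: str, output_a: str, output_b: str, judge_llm: Callable[[str], str]
) -> str:
    """Return "A", "B", or "tie" based on the summed rubric scores."""
    total_a = sum(judge(query, output_a, judge_llm).values())
    total_b = sum(judge(query, output_b, judge_llm).values())
    if total_a == total_b:
        return "tie"
    return "A" if total_a > total_b else "B"
```

Judge scores are themselves LLM output, so the sketch validates them the same way a chain step would be validated.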

🗂 Cheatsheet

Variant: Summarize-Translate

When to Use: Creating multilingual content from a single source.

Key Tip: Keep the intermediate summary concise and fact-focused to ensure accurate translation.

Variant: Extract-Format

When to Use: When you need structured data (like JSON or CSV) from unstructured text.

Key Tip: Always validate the fields and data types in the final output to catch errors early.

Variant: Brainstorm-Elaborate

When to Use: Ideation, creative writing, and strategic planning.

Key Tip: Use a separate step to rank or filter the brainstormed ideas before elaborating on the best ones.

Relevant Content

LangChain Documentation on Chains: https://python.langchain.com/docs/modules/chains/ (A widely used open-source implementation of this pattern).

Foundational Concept: This pattern is a direct application of the pipeline design pattern in software engineering, adapted for LLM-based workflows.

📅 Next Pattern

Stay tuned for our next article in the series: Design Pattern: Routing — Building Smart AI Workflows That Can Make Decisions.