5. Tool Use — Extending AI's Reach to the Real World

The Tool Use agentic pattern: give your AI hands. Connect LLMs to data, APIs, and the real world.


Tool Use is the pattern of giving a Large Language Model the ability to interact with external systems, such as APIs, databases, or code interpreters, to access information and perform actions that go beyond its built-in knowledge.


This pattern transforms a conversational LLM from a pure text generator into an active agent that can do things. By providing tools, you overcome the LLM's inherent limitations: its knowledge cutoff date, its unreliability at precise arithmetic, and its lack of access to private data. For a business, this is the key to building applications that can answer questions about real-time stock prices, look up customer order histories, or even book appointments.

📊 Video and Diagram

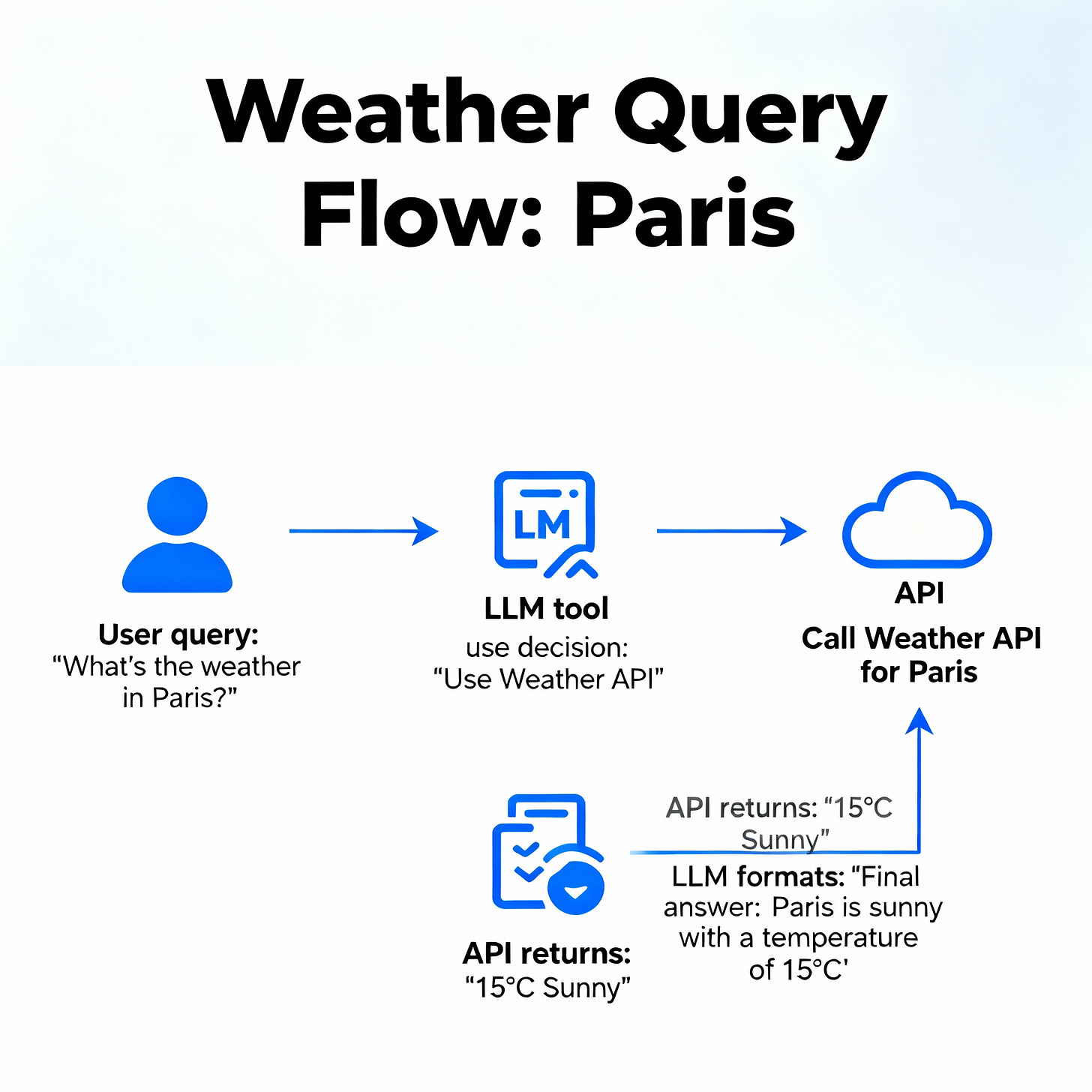

A visual of the Tool Use flow:

Query: "What's the weather in Paris?" -> [LLM Decides to Use Tool] -> Calls Weather API("Paris") -> API Returns "15°C, Sunny" -> [LLM Formats Final Answer] -> "The weather in Paris is 15°C and sunny."

What are LangChain Tools?

YouTube: LangChain Basics Tutorial #2 Tools and Chains by Sam Witteveen

A fantastic beginner-friendly explanation of what tools are in the context of LLM agents and how they grant new capabilities to your applications.

The Rise of LLM-Powered Agents

YouTube: AI Agents (and the Toolformer paper) by Fireship

A fast-paced, high-level overview of how models like Toolformer learn to use external tools, providing the academic background for this powerful pattern.

🚩 What Is Tool Use?

"An LLM's true power isn't just in what it knows, but in what it can connect to. Tools are the bridges that connect the world of language to the world of data and action."

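Tool Use allows an LLM to pause its text generation, request a call to an external piece of code (the "tool") with specific parameters, receive the tool's output, and then resume generating, incorporating the new information into its final response. Strictly speaking, the model does not execute anything itself: it emits a structured tool call (a tool name plus arguments), the surrounding application runs the code, and the result is fed back to the model. The LLM decides when to use a tool and which tool to use based on the user's prompt and the descriptions of the available tools.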
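The round trip described above can be sketched as a small loop. This is a minimal illustration, not any provider's API: `fake_model`, `get_weather`, and the message dictionaries are hypothetical stand-ins, and real LLM providers each define their own tool-call message format.

```python
import json

# Hypothetical tool: stubbed data instead of a real weather API call.
def get_weather(city: str) -> dict:
    return {"city": city, "temp_c": 15, "conditions": "Sunny"}

TOOLS = {"get_weather": get_weather}  # the only code the application agrees to run

def fake_model(messages: list) -> dict:
    """Stand-in for an LLM: requests a tool on the user's turn,
    then writes a final answer once a tool result is in the conversation."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool_call": {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})}}
    data = json.loads(last["content"])
    return {"content": f"The weather in {data['city']} is {data['temp_c']}°C and {data['conditions'].lower()}."}

def run_agent(user_query: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_query}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        messages.append({"role": "assistant", "tool_call": call})
        # The application, not the model, executes the requested tool.
        result = TOOLS[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    return "Stopped: too many tool calls."

print(run_agent("What's the weather in Paris?"))
# The weather in Paris is 15°C and sunny.
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without ever answering.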

🏗 Use Cases

Scenario: A travel planning app uses an AI assistant to help users. A user asks, "Find me a flight from New York to London next Tuesday and a hotel there for under $300 a night."

Applying the Pattern:

Step 1 (Tool Selection): The agent's router identifies two distinct needs, a flight and a hotel, and decides to use the flight_search tool and the hotel_search tool.

Step 2 (Parallel Tool Calls): The agent calls both tools with the extracted parameters:

flight_search(origin="JFK", destination="LHR", date="next Tuesday")
hotel_search(city="London", max_price=300)

Step 3 (Synthesize Results): The agent receives the JSON outputs from both APIs.

Step 4 (Generate Response): The LLM formats the structured data from the tools into a natural, helpful, human-readable paragraph summarizing the best flight and hotel options it found.

Outcome: The assistant provides a real-time, actionable, and accurate answer that would be impossible for an LLM to generate from its static knowledge alone.
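Steps 2 and 3 of this scenario can be sketched with the standard library's thread pool. The `flight_search` and `hotel_search` functions below are hypothetical stubs that return placeholder JSON; a real app would call actual flight and hotel APIs inside them.

```python
import json
from concurrent.futures import ThreadPoolExecutor

# Hypothetical tools returning stubbed JSON; real versions would call flight and hotel APIs.
def flight_search(origin: str, destination: str, date: str) -> str:
    return json.dumps({"origin": origin, "destination": destination, "date": date, "price_usd": 540})

def hotel_search(city: str, max_price: int) -> str:
    price = 280  # placeholder nightly rate
    return json.dumps({"city": city, "price_usd": price, "within_budget": price <= max_price})

TOOLS = {"flight_search": flight_search, "hotel_search": hotel_search}

# Step 2: the two tool calls the model emitted in a single turn.
calls = [
    ("flight_search", {"origin": "JFK", "destination": "LHR", "date": "next Tuesday"}),
    ("hotel_search", {"city": "London", "max_price": 300}),
]

def run_parallel(calls):
    """Runs independent tool calls concurrently and returns (tool_name, parsed_json) pairs in order."""
    with ThreadPoolExecutor(max_workers=len(calls)) as pool:
        futures = [(name, pool.submit(TOOLS[name], **args)) for name, args in calls]
        return [(name, json.loads(f.result())) for name, f in futures]

# Step 3: structured results from both APIs, ready for the LLM to summarize in Step 4.
for name, data in run_parallel(calls):
    print(name, data)
```

Because the two calls don't depend on each other, running them concurrently makes the total wait roughly the slowest single call rather than the sum of both.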

General Use: This pattern is crucial for grounding LLMs in reality.

Accessing Real-time Information: Answering questions about current events, stock prices, or weather.

Performing Calculations: Using a calculator or code interpreter for precise math.

Interacting with Private Data: Connecting to a company's internal database to answer "What is the status of my order?"

💻 Sample Code / Pseudocode

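This Python example shows a simplified agent that routes a query to either a weather tool or a calculator tool. Keyword matching and hard-coded arguments stand in for the LLM's tool selection and argument extraction.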


```python
import ast
import json
import operator

# --- Tool Definitions ---

class WeatherTool:
    def use(self, city: str) -> str:
        """Returns the current weather for a given city (stubbed data, no real API call)."""
        print(f"--- TOOL: Getting weather for {city} ---")
        if city.lower() == "new york":
            return json.dumps({"city": "New York", "temp_f": 72, "conditions": "Sunny"})
        return json.dumps({"error": "City not found"})


class CalculatorTool:
    # eval() is NOT safe even with __builtins__ removed, so instead we parse the
    # expression and evaluate only whitelisted arithmetic operators.
    _OPS = {
        ast.Add: operator.add,
        ast.Sub: operator.sub,
        ast.Mult: operator.mul,
        ast.Div: operator.truediv,
        ast.USub: operator.neg,
    }

    def _eval(self, node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in self._OPS:
            return self._OPS[type(node.op)](self._eval(node.left), self._eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in self._OPS:
            return self._OPS[type(node.op)](self._eval(node.operand))
        raise ValueError("Unsupported expression")

    def use(self, expression: str) -> str:
        """Calculates the result of a simple arithmetic expression."""
        print(f"--- TOOL: Calculating '{expression}' ---")
        try:
            tree = ast.parse(expression, mode="eval")
            return str(self._eval(tree.body))
        except (SyntaxError, ValueError, ZeroDivisionError):
            return "Error: Invalid expression"

# --- Agent Logic ---

class Agent:
    def __init__(self):
        self.tools = {
            "get_weather": {"obj": WeatherTool(), "description": "Finds the current weather in a city."},
            "calculator": {"obj": CalculatorTool(), "description": "Solves simple math expressions."},
        }

    def choose_tool(self, query: str):
        """Simulates an LLM router choosing the best tool (keyword matching stands in for the model)."""
        print(f"--- ROUTER: Analyzing query '{query}' ---")
        q = query.lower()
        if "weather" in q:
            return "get_weather", {"city": "New York"}  # Dummy argument extraction
        elif "calculate" in q or "+" in q or "*" in q:
            return "calculator", {"expression": "100 + 50"}  # Dummy argument extraction
        return None, None

    def run(self, query: str) -> str:
        tool_name, tool_args = self.choose_tool(query)
        if not tool_name:
            # Simulate the LLM answering directly without a tool
            return "I'm not sure which tool to use, but I can try to answer directly."

        print(f"--- ROUTER: Chose tool '{tool_name}' with args {tool_args} ---\n")
        tool = self.tools[tool_name]["obj"]
        try:
            tool_result = tool.use(**tool_args)
        except Exception as exc:
            # Surface tool failures as data instead of crashing the agent
            tool_result = json.dumps({"error": str(exc)})

        # Simulate the LLM synthesizing the final answer
        print(f"\n--- SYNTHESIZER: Got tool result: {tool_result} ---")
        return f"Based on your query, I used the {tool_name} tool and got this result: {tool_result}"

# --- Execute the workflow ---

agent = Agent()

result = agent.run("What's the weather like in New York?")
print("\n--- FINAL ANSWER ---")
print(result)

print("\n" + "=" * 40 + "\n")

result = agent.run("Can you calculate 100 + 50 for me?")
print("\n--- FINAL ANSWER ---")
print(result)
```

🟢 Pros

Extends Capabilities: Allows LLMs to overcome their inherent limitations (e.g., knowledge cutoffs, unreliable arithmetic, lack of access to private data).

Increases Accuracy: Grounds responses in factual, verifiable data from reliable sources instead of relying on the model's parametric memory.

Enables Action: Transforms a text generator into an agent that can perform real-world tasks like sending emails, booking appointments, or managing files.

🔴 Cons

Complexity: Requires careful design of tool specifications, input parsing, output handling, and robust error management.

Latency: Every tool call adds at least one extra round trip (typically a network request plus another model call to read the result), which can noticeably increase total response time.

Security & Safety: Tools that perform actions must be carefully secured with permissions and user confirmations to prevent unintended or malicious use.
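One way to enforce the permissions and confirmations mentioned under Security & Safety is to gate every model-requested call through an allowlist that flags side-effecting tools. This is a sketch under assumptions: the registry layout, the `side_effects` flag, and the `confirm` callback are hypothetical, not part of any framework.

```python
from typing import Callable

# Hypothetical allowlist: each tool declares whether it changes anything in the real world.
TOOLS = {
    "get_order_status": {"func": lambda order_id: {"order_id": order_id, "status": "shipped"}, "side_effects": False},
    "cancel_order": {"func": lambda order_id: {"order_id": order_id, "cancelled": True}, "side_effects": True},
}

def execute(name: str, args: dict, confirm: Callable[[str], bool]) -> dict:
    """Runs a model-requested tool, asking the user first if the tool has side effects."""
    tool = TOOLS.get(name)
    if tool is None:
        return {"error": f"unknown tool: {name}"}  # never run anything outside the allowlist
    if tool["side_effects"] and not confirm(f"Allow {name} with {args}?"):
        return {"error": "user declined"}
    return tool["func"](**args)

# A real app would show a confirmation dialog; these lambdas simulate the user's choice.
print(execute("get_order_status", {"order_id": "A123"}, confirm=lambda msg: False))
print(execute("cancel_order", {"order_id": "A123"}, confirm=lambda msg: False))
print(execute("cancel_order", {"order_id": "A123"}, confirm=lambda msg: True))
```

Read-only tools run without friction, while anything that changes state waits for an explicit yes.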

🛑 Anti-Patterns (Mistakes to Avoid)

Poor Tool Descriptions: The LLM relies on each tool's name, description, and parameter definitions to decide when and how to call it. A bare name like my_function with no description tells it nothing. A good description is "Calculates the square root of a positive integer."

Chatty Tool Outputs: Tools should return raw, structured data (like JSON or a simple string), not conversational sentences. It is the LLM's job to turn the tool's data into a conversational response.

Ignoring Tool Errors: Your agent must have a robust way to handle cases where a tool fails, times out, or returns an error, rather than crashing or returning a nonsensical answer.
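To avoid the Poor Tool Descriptions problem, one option is to generate each tool definition directly from the function's name, docstring, and type hints so they never drift apart. This sketch assumes a provider-neutral shape (`name`, `description`, and JSON Schema `parameters`) similar to what common function-calling APIs accept; the exact wrapper fields vary by provider, and `sqrt_int` and `tool_spec` are illustrative names.

```python
import inspect
import json

def sqrt_int(n: int) -> float:
    """Calculates the square root of a positive integer."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    return n ** 0.5

# Map simple Python annotations to JSON Schema types.
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}

def tool_spec(func) -> dict:
    """Builds a JSON-Schema-style tool definition from a function's name, docstring, and signature."""
    params = inspect.signature(func).parameters
    properties = {name: {"type": _JSON_TYPES[p.annotation]} for name, p in params.items()}
    # Parameters without a default value are required.
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

print(json.dumps(tool_spec(sqrt_int), indent=2))
```

A side benefit: a missing docstring shows up immediately as a `None` description, which is easy to catch in a test.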

🛠 Best Practices

Make Tools Atomic: Each tool should do one specific thing and do it well. Instead of a generic company_database tool, create specific, secure tools like get_order_status_by_id and find_customer_by_email.

Provide Usage Examples: In the prompt that defines the tools for the LLM, include one or two examples of how to call each tool correctly (a technique known as few-shot prompting).

Implement a Timeout: External API calls can sometimes hang or take too long. Always implement a timeout to prevent your agent from getting stuck and becoming unresponsive.
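A timeout and structured error handling fit naturally in one wrapper. The sketch below uses the standard library's `concurrent.futures` to enforce a deadline and always returns JSON the LLM can read, whether the tool succeeded, failed, or hung. `call_tool`, `slow_api`, and `failing_api` are hypothetical names for illustration.

```python
import json
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FuturesTimeout

_pool = ThreadPoolExecutor(max_workers=4)

def call_tool(tool, timeout_s: float, **kwargs) -> str:
    """Runs a tool with a deadline and always returns JSON:
    {"ok": true, "data": ...} on success, {"ok": false, "error": "..."} otherwise."""
    future = _pool.submit(tool, **kwargs)
    try:
        return json.dumps({"ok": True, "data": future.result(timeout=timeout_s)})
    except FuturesTimeout:
        # Python cannot kill the worker thread; real tools should also pass a timeout
        # to their HTTP or database client so the underlying call is abandoned too.
        return json.dumps({"ok": False, "error": f"timed out after {timeout_s}s"})
    except Exception as exc:  # report the failure instead of crashing the agent
        return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})

def slow_api(delay: float) -> str:  # hypothetical tool simulating a slow or hanging API
    time.sleep(delay)
    return "done"

def failing_api() -> str:  # hypothetical tool that raises
    raise ValueError("upstream returned 500")

print(call_tool(slow_api, 1.0, delay=0.05))  # {"ok": true, "data": "done"}
print(call_tool(slow_api, 0.1, delay=1.0))   # {"ok": false, "error": "timed out after 0.1s"}
print(call_tool(failing_api, 1.0))           # {"ok": false, "error": "ValueError: upstream returned 500"}
```

Returning errors as data lets the model explain the failure to the user or retry with different arguments.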

🧪 Sample Test Plan

Unit Tests: Test each tool function completely independently of the LLM. Use a testing framework to ensure it handles valid inputs, invalid inputs, and edge cases correctly.

End-to-End (Integration) Tests: Test the full loop: query -> LLM selects tool -> tool runs -> LLM synthesizes response. Verify the final answer is correct and well-formed.

Robustness Tests: Feed the agent ambiguous queries or queries designed to trick it into using the wrong tool. This helps you identify weaknesses in your tool descriptions.

Performance Tests: Measure the latency added by each tool call. Monitor API rate limits to ensure your agent doesn't get throttled by the services it depends on.
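The unit-test layer needs no model at all. This sketch uses the standard-library `unittest` module against a hypothetical `get_order_status_by_id` tool (the kind of atomic tool recommended under Best Practices), with a plain dict standing in for the real database.

```python
import unittest

def get_order_status_by_id(order_id: str, db: dict) -> dict:
    """Hypothetical atomic tool: looks up one order's status. `db` stands in for a real database."""
    if not isinstance(order_id, str) or not order_id.strip():
        return {"error": "order_id must be a non-empty string"}
    status = db.get(order_id)
    if status is None:
        return {"error": f"order {order_id} not found"}
    return {"order_id": order_id, "status": status}

class TestGetOrderStatus(unittest.TestCase):
    DB = {"A123": "shipped"}

    def test_valid_input(self):
        self.assertEqual(get_order_status_by_id("A123", self.DB), {"order_id": "A123", "status": "shipped"})

    def test_invalid_input_unknown_order(self):
        self.assertIn("error", get_order_status_by_id("Z999", self.DB))

    def test_edge_case_blank_id(self):
        self.assertIn("error", get_order_status_by_id("   ", self.DB))

if __name__ == "__main__":
    unittest.main(argv=["tool-tests"], exit=False)
```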

🤖 LLM as Judge/Evaluator

Recommendation: Use a judge LLM to evaluate both the agent's tool selection and its final answer.

How to Apply: Create a two-step evaluation prompt. First, show the judge the user query and the chosen tool and ask, "Was this the right tool to use for this query? Answer YES or NO and explain why." Second, show the query, the tool's raw data output, and the agent's final answer, and ask, "Does this answer accurately and helpfully incorporate the data from the tool? Score from 1-10."
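The two judge prompts, plus helpers to parse the judge's replies, might look like the sketch below. The wording follows the two-step recipe above; the extra instruction "Begin your reply with the score" is an assumption added to make the score easy to parse, and the call to the judge model itself is omitted.

```python
import re
from typing import Optional

def tool_selection_prompt(query: str, tool_name: str) -> str:
    """Judge step 1: was the chosen tool appropriate?"""
    return (f"User query: {query}\nChosen tool: {tool_name}\n"
            "Was this the right tool to use for this query? Answer YES or NO and explain why.")

def answer_quality_prompt(query: str, tool_output: str, final_answer: str) -> str:
    """Judge step 2: does the final answer use the tool's data accurately?"""
    return (f"User query: {query}\nTool output: {tool_output}\nAgent answer: {final_answer}\n"
            "Does this answer accurately and helpfully incorporate the data from the tool? "
            "Score from 1-10. Begin your reply with the score.")

def parse_verdict(judge_reply: str) -> bool:
    """True if the judge's reply starts with YES (case-insensitive)."""
    return judge_reply.strip().upper().startswith("YES")

def parse_score(judge_reply: str) -> Optional[int]:
    """Reads a leading 1-10 score; returns None if the reply doesn't start with one."""
    match = re.match(r"\s*(\d{1,2})\b", judge_reply)
    if match and 1 <= int(match.group(1)) <= 10:
        return int(match.group(1))
    return None

print(tool_selection_prompt("What's the weather in Paris?", "get_weather"))
print(parse_verdict("YES. The query asks for weather."), parse_score("9 - uses the temperature correctly"))
```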

🗂 Cheatsheet

Variant: Retrieval Augmented Generation (RAG)

When to Use: To answer questions from a specific, private knowledge base (e.g., your company's internal documents or website content).

Key Tip: The "tool" is a vector database search. The agent retrieves relevant text chunks and uses them as context to formulate a grounded answer.
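To show the retrieve-then-answer shape without any external services, the sketch below swaps the vector database for a toy bag-of-words cosine similarity over a hypothetical in-memory document list. A real system would use a learned embedding model and a vector store; only the overall flow carries over.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real system would embed these and store them in a vector DB.
DOCS = [
    "Refunds are processed within 5 business days of receiving the returned item.",
    "Our office is open Monday to Friday, 9am to 5pm.",
    "Orders over $50 ship free within the continental US.",
]

def _vec(text: str) -> Counter:
    # Bag-of-words counts stand in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9$]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search_docs(query: str, k: int = 1) -> list:
    """The retrieval 'tool': returns the k chunks most similar to the query."""
    q = _vec(query)
    return sorted(DOCS, key=lambda d: _cosine(q, _vec(d)), reverse=True)[:k]

def build_prompt(query: str) -> str:
    # Retrieved chunks become grounding context for the LLM's answer.
    context = "\n".join(search_docs(query, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How long do refunds take?"))
```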

Variant: Code Interpreter

When to Use: For complex math, data analysis, or logic problems that are better solved with code than with language.

Key Tip: The tool is a secure Python execution environment (a sandbox). This is one of the most powerful but also riskiest tools; ensure the execution environment is isolated and has no unintended permissions.
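As a starting point only, the sketch below runs model-written code in a separate Python process with a time limit. It is explicitly NOT a secure sandbox: the child process can still read files and open network connections. Production code interpreters add real isolation (containers, VMs, or a managed sandbox service). `run_python` is a hypothetical helper name.

```python
import subprocess
import sys

def run_python(code: str, timeout_s: float = 5.0) -> dict:
    """Runs code in a separate Python process with a time limit.

    NOT a security boundary: file system and network access are still available.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode (ignores env vars and user site-packages)
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising, so nothing is left running.
        return {"ok": False, "error": f"timed out after {timeout_s}s"}
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "stderr": proc.stderr}

print(run_python("print(sum(range(1, 101)))"))
```

Capturing stderr matters: returning the traceback to the model lets it fix its own code on the next attempt.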

Relevant Content

Toolformer Paper (arXiv:2302.04761): https://arxiv.org/abs/2302.04761 The groundbreaking paper from Meta AI showing how LLMs can be taught to use external tools through self-supervised learning.

LangChain Documentation on Tools: https://python.langchain.com/docs/modules/agents/tools/ The definitive guide for implementing tools within the LangChain framework, including many pre-built tool integrations.

Hugging Face Transformers Agent: https://huggingface.co/docs/transformers/en/agents An alternative framework for building tool-using agents, leveraging the extensive Hugging Face ecosystem of models and tools.

📅 Coming Soon

Stay tuned for our next article in the series: Design Pattern: Planning — How to Decompose Big Problems into Solvable Steps.