2. Routing — Building Smart AI Workflows That Can Make Decisions

Routing Design Pattern - The Brain of Your AI. Stop building one-trick ponies; build agents that can think and choose.

Routing — Agentic Design Pattern Series

Routing is the decision-making pattern that allows an AI agent to dynamically select the best tool, prompt, or workflow based on the user's query, transforming a simple linear process into an intelligent and efficient system.

If Prompt Chaining is the assembly line, Routing is the factory's central command. Instead of forcing every task down the same path, this pattern allows your AI to analyze a request and intelligently direct it to the right specialist. For businesses, this translates to huge efficiency gains by not wasting time or API calls on unnecessary steps. It’s the difference between a chatbot that can only answer one type of question and one that can expertly handle sales, support, and technical queries.

📊 Video and Diagram

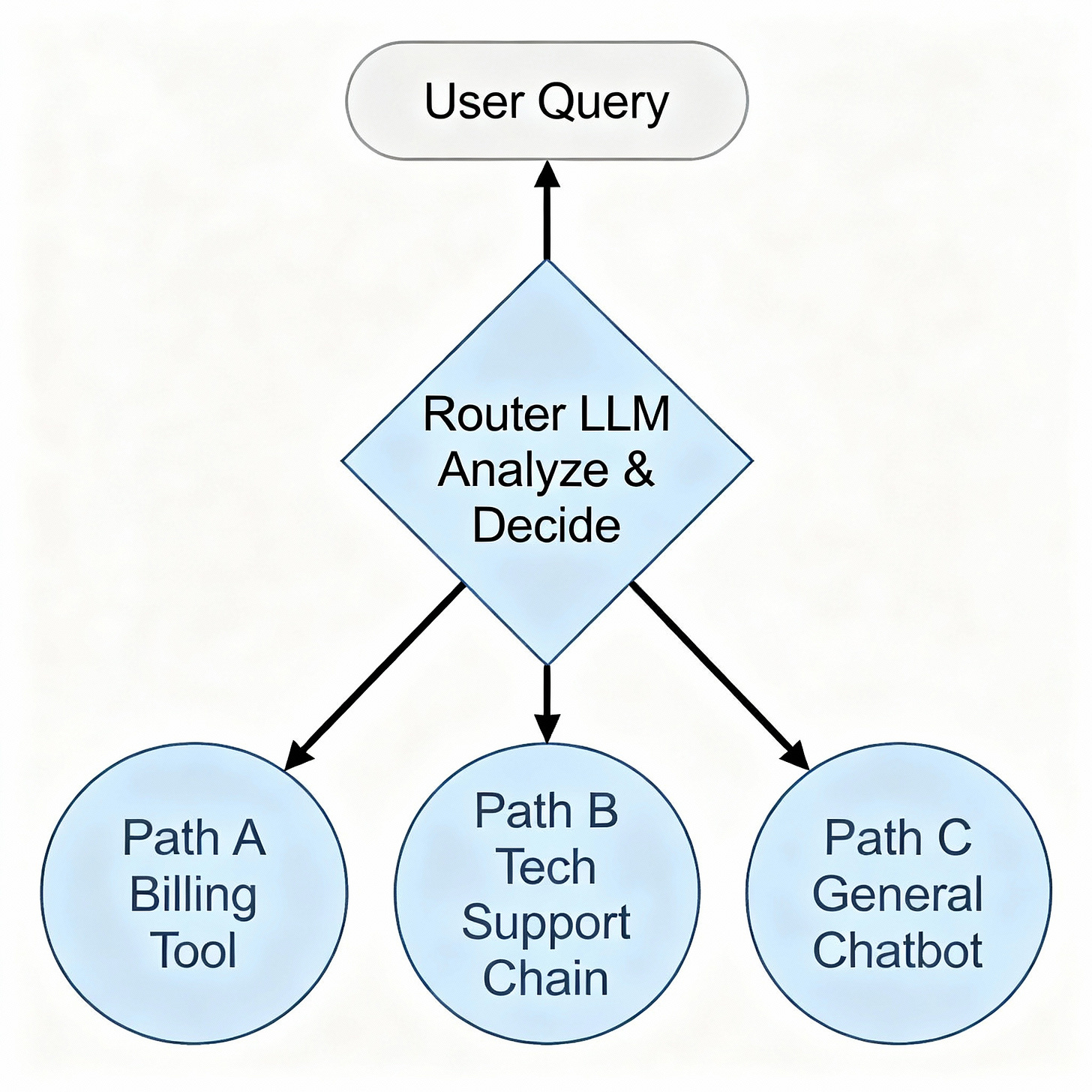

A visual of the decision-making flow:

LangChain Crash Course: Router Chains

YouTube: Router Chains | LangChain Crash Course by Patrick Loeber

An excellent, code-focused walkthrough by Patrick Loeber. This tutorial covers how to implement router chains in LangChain, showing how to dynamically select tools or workflows based on query intent. Ideal for anyone looking to add intelligent decision-making to LLM applications.

🚩 What Is Routing?

"The goal is not to build a model that knows everything, but to build a system that knows where to go to get the answer. That's intelligence, and routing is how we achieve it."

Routing uses a dedicated LLM call to act as a classifier or decision-maker. This "router" analyzes the user's input and, based on a set of predefined options, selects the most appropriate downstream path. This allows the agent to handle a wide variety of tasks by directing each one to a specialized tool or chain built for that kind of task.

🏗 Use Cases

Scenario: A financial services company wants to build a single AI assistant for its customers. User queries can range from "What's my account balance?" to "What are your predictions for the stock market this quarter?" to "How do I reset my password?"

Applying the Pattern:

Incoming Query: A user asks, "My card was declined, can you tell me why and also what the S&P 500 is trading at?"

Routing Step: A router LLM analyzes this ambiguous, multi-intent query. It's prompted to identify the distinct tasks required.

Decision & Dispatch: The router determines two paths are needed:

Path A (Tool Use): The "card declined" part is routed to a secure, internal `check_transaction_status` API.

Path B (Tool Use): The "S&P 500" part is routed to a real-time `get_stock_price` API.

Aggregation: The results from both paths are combined into a single, coherent answer for the user.

Outcome: The assistant handles a complex query efficiently by dispatching each sub-task to the right tool, providing a fast and accurate response that a single prompt would struggle to produce.
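The dispatch-and-aggregate flow in this scenario could be sketched roughly as follows. This is only an illustration under stated assumptions: the router is a keyword stub standing in for an LLM call, and the two tool functions return canned placeholder text. Only the names `check_transaction_status` and `get_stock_price` come from the scenario; their signatures and return values are hypothetical.

```python
from typing import Callable

# Hypothetical stand-ins for the two tools named in the scenario.
# Real implementations would call an internal API and a market-data API.
def check_transaction_status(query: str) -> str:
    return "Placeholder: the reason your card was declined would appear here."

def get_stock_price(query: str) -> str:
    return "Placeholder: the current S&P 500 level would appear here."

TOOLS: dict[str, Callable[[str], str]] = {
    "check_transaction_status": check_transaction_status,
    "get_stock_price": get_stock_price,
}

def route_multi_intent(query: str) -> list[str]:
    """Stub router: a real system would ask an LLM to list the tools needed."""
    text = query.lower()
    routes = []
    if "declined" in text or "transaction" in text:
        routes.append("check_transaction_status")
    if "s&p" in text or "stock" in text:
        routes.append("get_stock_price")
    return routes

def handle(query: str) -> str:
    routes = route_multi_intent(query)
    if not routes:
        return "Sorry, I'm not sure how to help with that."
    # Dispatch each sub-task to its tool, then aggregate the answers.
    answers = [TOOLS[name](query) for name in routes]
    return " ".join(answers)

reply = handle("My card was declined, can you tell me why and also "
               "what the S&P 500 is trading at?")
print(reply)
```

In a real assistant the aggregation step would likely be another LLM call that merges the tool outputs into one answer; joining strings is just the simplest stand-in.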

General Use: This pattern is essential whenever an agent has multiple tools or workflows and needs to choose the correct one.

Customer Support Bots: Routing queries to billing, technical support, or human escalation paths.

Multi-Tool Agents: Deciding whether to use a web search, a calculator, or a code interpreter.

Question-Answering Systems: Choosing between a technical knowledge base, a user database, or a general LLM for creative questions.

💻 Sample Code / Pseudocode

This Python pseudocode shows a simple router that decides between two different "specialist" chains.

In Python

    def call_llm(prompt):
        # Simulates an LLM API call.
        print(f"--- Calling LLM with prompt: ---\n{prompt[:100]}...\n")
        if "['math', 'general']" in prompt:  # This is our router prompt
            if "calculate" in prompt.lower() or "what is" in prompt.lower():
                return "math"
            else:
                return "general"
        elif "math_expert" in prompt:
            return "The answer is 42."
        elif "creative_writer" in prompt:
            return "Once upon a time, in a land of code..."
        return "Error: Unknown prompt."

    def router(query):
        """
        Analyzes the query and returns the name of the best chain to use.
        """
        available_chains = ['math', 'general']
        router_prompt = f"""
        Given the user query: "{query}"
        Which of the following chains is the best one to use?
        Chains: {available_chains}
        Return only the name of the best chain.
        """
        chosen_chain = call_llm(router_prompt).strip()
        print(f"--- Router decided: '{chosen_chain}' ---\n")
        return chosen_chain

    def run_agentic_workflow(query):
        """
        Routes the query to the correct specialist chain and executes it.
        """
        chain_name = router(query)
        if chain_name == "math":
            # Execute the math specialist chain
            math_prompt = f"You are a math_expert. Answer this: {query}"
            result = call_llm(math_prompt)
        elif chain_name == "general":
            # Execute the creative writing chain
            general_prompt = f"You are a creative_writer. Respond to this: {query}"
            result = call_llm(general_prompt)
        else:
            result = "Sorry, I don't know how to handle that request."
        print(f"--- Final Result: ---\n{result}")
        return result

    # --- Execute the workflow ---
    run_agentic_workflow("Calculate 6 times 7.")
    print("\n" + "="*20 + "\n")
    run_agentic_workflow("Tell me a short story.")

🟢 Pros

Efficiency: Saves time and money by avoiding unnecessary LLM calls or tool usage.

Flexibility & Scalability: Easily add new tools or skills by simply adding a new route.

Improved Accuracy: Directing a query to a specialized tool or a finely tuned prompt chain typically yields higher-quality results than a one-size-fits-all prompt.
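To make the flexibility point concrete, here is a small sketch of a route registry in which adding a skill means adding one decorated function. The `route` decorator, the `ROUTES` registry, and the handler bodies are all hypothetical; the idea shown is only that the router prompt can be generated from the registry, so a newly registered route appears in the prompt without extra work.

```python
from typing import Callable

# Hypothetical registry: route name -> (description, handler).
ROUTES: dict[str, tuple[str, Callable[[str], str]]] = {}

def route(name: str, description: str):
    """Register a handler under a descriptive route name."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        ROUTES[name] = (description, func)
        return func
    return decorator

@route("billing_inquiry", "Questions about invoices, charges, or refunds.")
def billing(query: str) -> str:
    return f"[billing] handling: {query}"

@route("technical_support_docs", "How-to and troubleshooting questions.")
def tech_support(query: str) -> str:
    return f"[tech support] handling: {query}"

def build_router_prompt(query: str) -> str:
    """Build the router prompt from whatever routes are registered."""
    options = "\n".join(
        f"- {name}: {desc}" for name, (desc, _handler) in ROUTES.items()
    )
    return (
        f'Given the user query: "{query}"\n'
        f"Choose the best route from this list:\n{options}\n"
        "Return only the route name."
    )

print(build_router_prompt("Why was I charged twice?"))
```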

🔴 Cons

Central Point of Failure: The entire system's performance hinges on the router making the correct choice. A bad routing decision leads to a failed outcome.

Ambiguity: The router can struggle with vague or multi-intent queries that don't fit neatly into one category.

Prompt Engineering: Crafting a reliable and robust router prompt is a non-trivial engineering task.

🛑 Anti-Patterns (Mistakes to Avoid)

Vague Route Descriptions: The router prompt must contain clear, distinct, and descriptive names for each route. `chain_1` and `chain_2` are bad names; `billing_inquiry` and `technical_support_docs` are good names.

Not Having a Default/Fallback: If the router is uncertain or no route matches, it should have a default path (e.g., "I'm sorry, I'm not sure how to help with that") instead of guessing incorrectly.

Overloading the Router: Don't ask the router to both classify the query and answer it. Its only job is to choose the next step.

Forgetting to Update the Router: When you add a new tool or chain, you must also update the router's prompt to make it aware of the new option.
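One way to guard against a missing fallback is to validate whatever the router returns before dispatching. The sketch below assumes the router replies with free text that should contain exactly one route name; the route set, the `fallback` label, and both helper functions are illustrative choices rather than part of any library.

```python
VALID_ROUTES = {"billing_inquiry", "technical_support_docs"}
FALLBACK_ROUTE = "fallback"

def normalize_route(raw_output: str) -> str:
    """Map raw router output to a known route, or to the fallback."""
    # Trim whitespace, stray quotes, backticks, and trailing periods.
    cleaned = raw_output.strip().strip("'\"`.").lower()
    return cleaned if cleaned in VALID_ROUTES else FALLBACK_ROUTE

def respond(route_name: str) -> str:
    if route_name == FALLBACK_ROUTE:
        return "I'm sorry, I'm not sure how to help with that."
    return f"Dispatching to {route_name}"

print(respond(normalize_route(" Billing_Inquiry.\n")))
print(respond(normalize_route("I think chain_1 is best")))
```

Anything the router says that is not an exact, known route name lands on the fallback, so an unexpected reply never reaches a specialist chain by accident.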

🛠 Best Practices

Use Few-Shot Prompting: Provide 2-3 examples of queries and their correct routes directly in the router's prompt to improve its accuracy.

Keep the Router Lightweight: Use a fast and cheap model for the routing step. The heavy lifting should be done by the specialist chains.

Iterate on Descriptions: The quality of your route descriptions is paramount. Continuously refine them based on where the router makes mistakes.
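As an illustration of the few-shot tip, a router prompt with embedded examples might be assembled like this. The example queries, the route names, and the prompt wording are assumptions made for the sketch; in practice they would come from your own misrouted cases.

```python
# Hypothetical few-shot examples: (query, correct route).
FEW_SHOT_EXAMPLES = [
    ("What is 15% of 240?", "math"),
    ("Write a haiku about autumn.", "general"),
    ("Calculate the area of a circle with radius 3.", "math"),
]

def build_few_shot_router_prompt(query: str, routes: list[str]) -> str:
    """Build a router prompt that shows a few labeled examples first."""
    examples = "\n".join(
        f'Query: "{q}"\nRoute: {r}' for q, r in FEW_SHOT_EXAMPLES
    )
    return (
        "Choose the best route for the user query.\n"
        f"Available routes: {', '.join(routes)}\n\n"
        f"Examples:\n{examples}\n\n"
        f'Query: "{query}"\nRoute:'
    )

prompt = build_few_shot_router_prompt("Tell me a short story.", ["math", "general"])
print(prompt)
```

Ending the prompt on `Route:` nudges the model to complete with just the route name, which keeps the output easy to parse.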

🧪 Sample Test Plan

Unit Tests: The most important unit test for a router is a classification test. Create a dataset of 50-100 example queries and their "correct" route. Run each query through the router and assert that it picks the right one.

Python

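    # Example using pytest for router classification.
    # Assumes `router` is imported from your application and is configured
    # with "math", "web_search", and "creative" routes.
    import pytest

    @pytest.mark.parametrize("query, expected_route", [
        ("What is 2+2?", "math"),
        ("Who won the world series in 2022?", "web_search"),
        ("Write a poem.", "creative"),
    ])
    def test_router_choices(query, expected_route):
        assert router(query) == expected_route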

End-to-End (Integration) Tests: Test the full workflow. Provide an input and check that the final output is what you'd expect from the specialist chain that should have been chosen.

Robustness Tests: Feed the router ambiguous queries that could plausibly fit multiple routes and see how it behaves. This helps you identify where your route descriptions need more clarity.

Performance Tests: Measure the latency of the routing step itself. It should be very fast. If it's slow, your router model may be too large.
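The classification and performance checks above can share one small harness. The sketch below is an assumption-laden example: `keyword_router` is a stand-in for the real LLM-backed router, the three-item dataset is invented for illustration, and the harness reports accuracy, the misrouted cases, and mean routing latency.

```python
import time

def keyword_router(query: str) -> str:
    """Stand-in router; swap in the real (LLM-backed) router under test."""
    text = query.lower()
    if "calculate" in text or "what is" in text:
        return "math"
    return "general"

def evaluate_router(router_fn, labeled_queries):
    """Return (accuracy, misrouted cases, mean latency in seconds)."""
    if not labeled_queries:
        raise ValueError("labeled_queries must not be empty")
    misrouted = []
    total_time = 0.0
    for query, expected in labeled_queries:
        start = time.perf_counter()
        chosen = router_fn(query)
        total_time += time.perf_counter() - start
        if chosen != expected:
            misrouted.append((query, expected, chosen))
    n = len(labeled_queries)
    return (n - len(misrouted)) / n, misrouted, total_time / n

# Tiny illustrative dataset; the last query is deliberately ambiguous
# for a keyword-based router.
DATASET = [
    ("Calculate 6 times 7.", "math"),
    ("Tell me a short story.", "general"),
    ("What is the plot of Hamlet?", "general"),
]

accuracy, misrouted, mean_latency = evaluate_router(keyword_router, DATASET)
print(f"accuracy={accuracy:.2f}, mean latency={mean_latency:.6f}s")
for query, expected, chosen in misrouted:
    print(f"MISROUTED: {query!r} expected={expected} got={chosen}")
```

The misrouted list is usually the most useful output, since it points directly at the route descriptions that need refining.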

🤖 LLM as Judge/Evaluator

Recommendation: Use a powerful LLM to specifically evaluate the decision made by your router, not the final output.

How to Apply: Create a scoring prompt that shows the judge LLM the original query and the route that your router chose. Ask a simple question: "Was this the correct choice? Answer YES or NO, and explain why." Run this over your test dataset to quickly find and analyze routing errors.
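A judge setup along these lines could look like the following sketch. The prompt wording follows the question quoted above; `fake_judge` is a hypothetical stub standing in for a call to a stronger model, and the parsing assumes the judge starts its reply with YES or NO.

```python
from typing import Optional

def build_judge_prompt(query: str, chosen_route: str, routes: list[str]) -> str:
    """Show the judge the query, the options, and the router's choice."""
    return (
        "You are evaluating a routing decision.\n"
        f'User query: "{query}"\n'
        f"Available routes: {', '.join(routes)}\n"
        f"Route chosen: {chosen_route}\n"
        "Was this the correct choice? Answer YES or NO, and explain why."
    )

def parse_verdict(judge_reply: str) -> Optional[bool]:
    """Return True for YES, False for NO, None if the reply is unclear."""
    words = judge_reply.strip().split()
    if not words:
        return None
    first = words[0].strip(".,:;!").upper()
    if first == "YES":
        return True
    if first == "NO":
        return False
    return None

def fake_judge(prompt: str) -> str:
    """Hypothetical stub; replace with a real call to the judge model."""
    return "NO. A story request is not a math problem."

judge_prompt = build_judge_prompt("Tell me a short story.", "math", ["math", "general"])
verdict = parse_verdict(fake_judge(judge_prompt))
print(verdict)
```

Returning `None` for unparseable replies keeps them separate from genuine NO verdicts, so they can be reviewed by hand instead of being counted as routing errors.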

🗂 Cheatsheet

Variant: Intent-Based Routing

When to Use: Standard use case for chatbots and agents. Classifies the user's goal.

Key Tip: Start descriptions with action verbs (e.g., `Calculate_Math`, `Search_Web`, `Answer_User_History`).

Variant: Tool-Selection Routing

When to Use: When an agent has a set of APIs it can call.

Key Tip: Ensure tool descriptions clearly state the exact inputs the tool requires and what it returns.

Variant: Fallback Routing

When to Use: To handle uncertainty and prevent errors.

Key Tip: Always include a "default" or "fallback" route for queries that don't match any other option.
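For the tool-selection variant, one possible way to keep tool descriptions explicit about inputs and outputs is a small spec object per tool. Everything in this sketch is hypothetical, including the `ToolSpec` fields and the input and output wording; only the route names `Calculate_Math` and `Search_Web` are taken from the cheatsheet.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical description of one callable tool."""
    name: str
    purpose: str
    inputs: str
    returns: str

TOOL_SPECS = [
    ToolSpec(
        name="Calculate_Math",
        purpose="Evaluate an arithmetic expression.",
        inputs="expression (string, e.g. '6 * 7')",
        returns="the numeric result",
    ),
    ToolSpec(
        name="Search_Web",
        purpose="Look up current or factual information online.",
        inputs="search_query (string)",
        returns="a list of short result snippets",
    ),
]

def render_tool_menu(specs: list[ToolSpec]) -> str:
    """Format tool specs as the option list for a router prompt."""
    return "\n".join(
        f"- {s.name}: {s.purpose} Inputs: {s.inputs}. Returns: {s.returns}."
        for s in specs
    )

print(render_tool_menu(TOOL_SPECS))
```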

Relevant Content

LangChain Documentation on Routing: https://python.langchain.com/docs/expression_language/how_to/routing (Provides code and concepts for several routing methods).

MRKL Systems Paper (arXiv:2205.00445): https://arxiv.org/abs/2205.00445 (A foundational academic paper that proposes a neuro-symbolic architecture with a "router" that selects which "expert" tool to use).

LinkedIn Article: LLM Routing: AI Costs Optimisation Without Sacrificing Quality

A clear introduction to LLM routing strategies: explains how dynamically assigning each prompt to the right LLM or tool (based on the query's needs) reduces costs, improves efficiency, and ensures high-quality answers. Perfect for product managers and engineers exploring scalable AI designs.

📅 Coming Soon

Stay tuned for our next article in the series: Design Pattern: Parallelization — Supercharging Your AI's Speed by Running Tasks in Parallel.